
Minimizing the Linear Regression Cost in TensorFlow¶

What does the cost function look like?¶

In [6]:

import tensorflow as tf

import matplotlib.pyplot as plt

X = [1,2,3]

Y = [1,2,3]

W = tf.placeholder(tf.float32)

# Our hypothesis for the linear model X * W

hypothesis = X * W

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Launch the graph in a session.

sess = tf.Session()

# Initializes global variables in the graph.

sess.run(tf.global_variables_initializer())

# Variables for plotting the cost function

W_val = []

cost_val = []

for i in range(-30,50):

    feed_W = i * 0.1

    curr_cost, curr_W = sess.run([cost, W], feed_dict={W: feed_W})

    W_val.append(curr_W)

    cost_val.append(curr_cost)

# Show the cost function

plt.plot(W_val, cost_val)

plt.show()

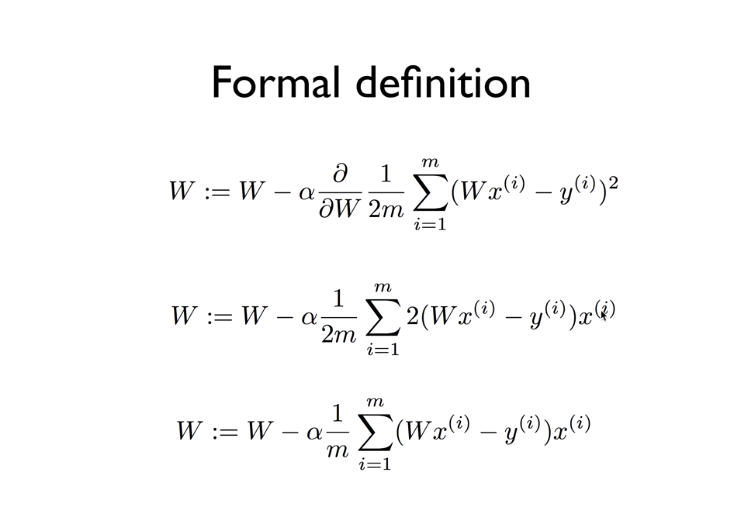
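To check the shape of the curve without a TensorFlow session, the same cost can be evaluated in plain Python. This is only a minimal sketch: the helper name `cost` and the variables `w_values`, `cost_values`, `best_w`, and `best_cost` are chosen here for illustration and are not part of the original notebook.

```python
# Pure-Python version of the cost curve; no TensorFlow required.
X = [1, 2, 3]
Y = [1, 2, 3]


def cost(w, xs=X, ys=Y):
    """Mean squared error of the hypothesis w * x over the data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Same grid as the notebook cell: W from -3.0 to 4.9 in steps of 0.1.
w_values = [i * 0.1 for i in range(-30, 50)]
cost_values = [cost(w) for w in w_values]

# Pick the grid point with the lowest cost.
best_cost, best_w = min(zip(cost_values, w_values))
print(f"lowest cost {best_cost:.4f} at W = {best_w:.1f}")
```

The curve is a parabola in W, and for this data the lowest point on the grid is at W = 1, where the hypothesis fits every sample exactly.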

Gradient descent¶

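In the update rule W -= learning_rate * derivative, the derivative term subtracted from W (scaled by the learning rate) is the gradient.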
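For reference, the gradient expression used in the code can be derived from the mean squared error cost. The symbols m (number of samples) and α (learning rate) are notation assumed here, not taken from the original post.

```latex
\begin{aligned}
\mathrm{cost}(W) &= \frac{1}{m}\sum_{i=1}^{m}\left(W x_i - y_i\right)^2 \\
\frac{\partial\,\mathrm{cost}}{\partial W} &= \frac{2}{m}\sum_{i=1}^{m}\left(W x_i - y_i\right)x_i \\
W &:= W - \alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(W x_i - y_i\right)x_i
\end{aligned}
```

The update in the last line drops the constant factor 2, as `tf.reduce_mean((W * X - Y) * X)` does. This only rescales the effective learning rate and does not move the minimum.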

In [ ]:

# Minimize: Gradient Descent using derivative: W -= learning_rate * derivative

learning_rate = 0.1

gradient = tf.reduce_mean((W * X - Y) * X)

descent = W - learning_rate * gradient

update = W.assign(descent)  # assign the value of the right-hand expression to W

In [8]:

import tensorflow as tf

x_data = [1,2,3]

y_data = [1,2,3]

W = tf.Variable(tf.random_normal([1]), name='weight')

X = tf.placeholder(tf.float32)

Y = tf.placeholder(tf.float32)

# Our hypothesis for linear model X * W

hypothesis = X * W

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Minimize: Gradient Descent using derivative: W -= learning_rate * derivative

learning_rate = 0.1

gradient = tf.reduce_mean((W * X - Y) * X)

descent = W - learning_rate * gradient

update = W.assign(descent)

# Launch the graph in a session.

sess = tf.Session()

# Initializes global variables in the graph.

sess.run(tf.global_variables_initializer())

for step in range(21):

    sess.run(update, feed_dict={X: x_data, Y: y_data})

    # Check that the update works: prints step, cost, W in that order.

    print(step, sess.run(cost, feed_dict={X: x_data, Y: y_data}), sess.run(W))
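The same update loop can be traced in plain Python to see how W and the cost move at each step. This is a sketch under stated assumptions: the starting value `w = 5.0` is a fixed, hypothetical choice (the cell above draws W at random), and the helper names `cost`, `gradient`, and `history` are illustrative.

```python
x_data = [1, 2, 3]
y_data = [1, 2, 3]


def cost(w):
    """Mean squared error of the hypothesis w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(x_data, y_data)) / len(x_data)


def gradient(w):
    """Mean of (w*x - y) * x, the same expression as in the cell above (factor 2 dropped)."""
    return sum((w * x - y) * x for x, y in zip(x_data, y_data)) / len(x_data)


learning_rate = 0.1
w = 5.0  # hypothetical fixed start; the notebook cell uses a random initial W
history = []
for step in range(21):
    w = w - learning_rate * gradient(w)   # W -= learning_rate * derivative
    history.append((step, cost(w), w))

# Print every fifth step: step, cost, W.
for step, c, w_step in history[::5]:
    print(step, c, w_step)
```

With this data, the cost shrinks at every step and W approaches 1.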

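Because this cost is simple, its derivative, (W * X - Y) * X, is simple too. A cost function can be far more complicated, though. In that case, the following approach finds the minimum without differentiating by hand.¶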

In [ ]:

# Minimize: Gradient Descent Magic

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)

train = optimizer.minimize(cost)
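To illustrate the idea of minimizing a cost without writing its derivative by hand, here is a rough sketch in plain Python. Note the substitution: TensorFlow's optimizer obtains gradients by automatic differentiation of the graph, whereas this sketch uses a central finite-difference approximation as a stand-in. The names `numeric_gradient`, `minimize`, `eps`, and `trace` are hypothetical helpers, not TensorFlow APIs.

```python
X = [1, 2, 3]
Y = [1, 2, 3]


def cost(w):
    """Mean squared error of the hypothesis w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(X, Y)) / len(X)


def numeric_gradient(f, w, eps=1e-6):
    """Central-difference estimate of df/dw (a stand-in for autodiff)."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)


def minimize(f, w, learning_rate=0.1, steps=10):
    """Plain gradient descent that only needs to evaluate f, never its formula."""
    trace = [w]
    for _ in range(steps):
        w = w - learning_rate * numeric_gradient(f, w)
        trace.append(w)
    return trace


trace = minimize(cost, 5.0)
print(trace[:4])
```

Only `cost` has to be supplied. Swapping in a more complicated cost function would not require changing `minimize`.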

Output when W=5¶

In [10]:

import tensorflow as tf

# tf Graph Input

X = [1,2,3]

Y = [1,2,3]

# Set wrong model weights

W = tf.Variable(5.0)

# Linear model

hypothesis = X * W

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Minimize: Gradient Descent Magic

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)

train = optimizer.minimize(cost)

# Launch the graph in a session.

sess = tf.Session()

# Initializes global variables in the graph.

sess.run(tf.global_variables_initializer())

for step in range(10):

    print(step, sess.run(W))

    sess.run(train)

Output when W=-3¶

In [8]:

import tensorflow as tf

# tf Graph Input

X = [1,2,3]

Y = [1,2,3]

# Set wrong model weights

W = tf.Variable(-3.0)

# Linear model

hypothesis = X * W

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Minimize: Gradient Descent Magic

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)

train = optimizer.minimize(cost)

# Launch the graph in a session.

sess = tf.Session()

# Initializes global variables in the graph.

sess.run(tf.global_variables_initializer())

for step in range(10):

    print(step, sess.run(W))

    sess.run(train)
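Both starting points, W = 5 and W = -3, end up at the same place. The plain-Python sketch below suggests why: because Y equals X in this data, the exact gradient is proportional to (W - 1), so each step multiplies the distance to W = 1 by a fixed factor. The names `gradient`, `run`, `shrink`, `from_5`, and `from_minus_3` are illustrative and not taken from the notebook.

```python
X = [1, 2, 3]
Y = [1, 2, 3]
learning_rate = 0.1


def gradient(w):
    """Exact derivative of mean((w*x - y)^2) with respect to w."""
    return 2 * sum((w * x - y) * x for x, y in zip(X, Y)) / len(X)


def run(w, steps=10):
    """Record W before each update, in the same order as the cells above."""
    trace = []
    for _ in range(steps):
        trace.append(w)
        w -= learning_rate * gradient(w)
    return trace


from_5 = run(5.0)
from_minus_3 = run(-3.0)

# For this data, each step multiplies the distance to W = 1 by this factor.
shrink = 1 - learning_rate * 2 * sum(x * x for x in X) / len(X)

for a, b in zip(from_5[:4], from_minus_3[:4]):
    print(f"{a:.6f}  {b:.6f}")
print("shrink factor per step:", shrink)
```

A starting value above 1 approaches it from above and one below 1 approaches it from below, at the same rate.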

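Optional: compute_gradients and apply_gradients¶

Use these when you want to modify the gradients that TensorFlow computes, for example by applying extra operations to them, before they are applied.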

In [1]:

import tensorflow as tf

X = [1,2,3]

Y = [1,2,3]

# Set wrong model weights

W = tf.Variable(5.)

# Linear model

hypothesis = X * W

# Manual gradient

gradient = tf.reduce_mean((W * X - Y) * X) * 2

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01)

# Get gradients

gvs = optimizer.compute_gradients(cost)

# Apply gradients

apply_gradients = optimizer.apply_gradients(gvs)

# Launch the graph in a session.

sess = tf.Session()

sess.run(tf.global_variables_initializer())

for step in range(100):

    print(step, sess.run([gradient, W, gvs]))

    sess.run(apply_gradients)
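The compute, modify, apply pattern can be sketched in plain Python. Clipping is used here purely as a hypothetical example of a modification, and `compute_gradient`, `clip`, `train`, and `modify` are illustrative names rather than TensorFlow APIs. In the TensorFlow code above, the corresponding step would transform each (gradient, variable) pair in `gvs` before passing the list to `apply_gradients`.

```python
X = [1, 2, 3]
Y = [1, 2, 3]


def compute_gradient(w):
    """Exact derivative of mean((w*x - y)^2); mirrors the manual `gradient` above."""
    return 2 * sum((w * x - y) * x for x, y in zip(X, Y)) / len(X)


def clip(g, limit=1.0):
    """Hypothetical modification: keep the gradient within [-limit, limit]."""
    return max(-limit, min(limit, g))


def train(w, modify=lambda g: g, learning_rate=0.01, steps=100):
    for _ in range(steps):
        g = compute_gradient(w)      # step 1: compute the gradient
        g = modify(g)                # step 2: adjust it (identity by default)
        w = w - learning_rate * g    # step 3: apply the update
    return w


w_plain = train(5.0)
w_clipped = train(5.0, modify=clip)
print(w_plain, w_clipped)
```

With the same learning rate and step count, the clipped run moves W much more slowly, because every gradient in this range is capped at 1.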
