Logistic Regression

    from PIL import Image
    Image.open('Logistic Regression.png')

Training Data

    import tensorflow as tf

    x_data = [[1, 2], [2, 3], [3, 1], [4, 3], [5, 3], [6, 2]]
    y_data = [[0], [0], [0], [1], [1], [1]]

    # Placeholders for tensors that will always be fed.
    X = tf.placeholder(tf.float32, shape=[None, 2])
    Y = tf.placeholder(tf.float32, shape=[None, 1])
    W = tf...
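Because the excerpt is cut off before the model is defined, here is a minimal plain-Python sketch of what logistic regression on this training data could look like. It is not the post's TensorFlow code: the sigmoid hypothesis and cross-entropy cost are the standard textbook choices, and the helper names, step count, and learning rate are assumptions made for illustration.

```python
import math

# Training data copied from the excerpt above.
x_data = [[1, 2], [2, 3], [3, 1], [4, 3], [5, 3], [6, 2]]
y_data = [0, 0, 0, 1, 1, 1]  # flattened from [[0], [0], ...] for plain Python


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Hypothesis: sigmoid of the weighted sum of the two features plus a bias."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def cost(w, b):
    """Mean binary cross-entropy over the training set."""
    total = 0.0
    for x, y in zip(x_data, y_data):
        h = predict(w, b, x)
        total += -(y * math.log(h) + (1 - y) * math.log(1 - h))
    return total / len(x_data)


def train(steps=2000, learning_rate=0.1):  # hypothetical hyperparameters
    w, b = [0.0, 0.0], 0.0
    n = len(x_data)
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in zip(x_data, y_data):
            err = predict(w, b, x) - y  # derivative of the loss w.r.t. the logit
            grad_w[0] += err * x[0] / n
            grad_w[1] += err * x[1] / n
            grad_b += err / n
        w = [w[0] - learning_rate * grad_w[0], w[1] - learning_rate * grad_w[1]]
        b -= learning_rate * grad_b
    return w, b


initial_cost = cost([0.0, 0.0], 0.0)
w, b = train()
final_cost = cost(w, b)
print("cost before:", initial_cost, "after:", final_cost)
print("predictions:", [round(predict(w, b, x), 3) for x in x_data])
```

With all weights at zero every prediction is 0.5, so the starting cost is ln 2; training should push it lower from there.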

Loading data from file

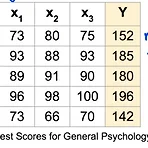

Let's load the data using NumPy.

    import numpy as np

    from PIL import Image
    Image.open('data-01-test-score.png')

    xy = np.loadtxt('data-01-test-score.csv', delimiter=',', dtype=np.float32)  # works only if every value has the same type
    x_data = xy[:, 0:-1]
    y_data = xy[:, [-1]]

    ##### Slicing #####
    nums = list(range(5))  # range is a built-in; list() turns it into a list of integers
    print(num..
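The two slices in the excerpt are easy to misread, so here is a small sketch that runs them on in-memory text instead of the real file. The rows are illustrative only and are not claimed to be the contents of `data-01-test-score.csv`; `csv_text` is a hypothetical name.

```python
import io

import numpy as np

# Illustrative rows only (three scores, then a target); NOT the contents of
# data-01-test-score.csv.
csv_text = """73,80,75,152
93,88,93,185
89,91,90,180
96,98,100,196
73,66,70,142
"""

xy = np.loadtxt(io.StringIO(csv_text), delimiter=",", dtype=np.float32)

x_data = xy[:, 0:-1]   # every row, all columns except the last
y_data = xy[:, [-1]]   # every row, last column only, kept 2-D by the list index

print(x_data.shape, y_data.shape)
print(xy[:, -1].shape)  # a plain integer index drops the column axis
```

Indexing with `[-1]` rather than `-1` keeps `y_data` as a column, which matches a placeholder shaped `[None, 1]`.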

Multi-variable linear regression

    from PIL import Image
    Image.open('그림3.png')

H(x1, x2, x3) = x1w1 + x2w2 + x3w3

    import tensorflow as tf

    x1_data = [73., 93., 89., 96., 73.]
    x2_data = [80., 88., 91., 98., 66.]
    x3_data = [75., 93., 90., 100., 70.]
    y_data = [152., 185., 180., 196., 142.]

    # Placeholders for tensors that will always be fed.
    x1 = tf.placeholder(tf.float32)
    x2 =..
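As a rough illustration of the hypothesis H(x1, x2, x3) = x1w1 + x2w2 + x3w3, the sketch below fits it to the excerpt's data with hand-written gradient descent in plain Python. This is not the post's TensorFlow code: the bias term `b`, the learning rate, and the step count are assumptions.

```python
# Data copied from the excerpt above.
x1_data = [73., 93., 89., 96., 73.]
x2_data = [80., 88., 91., 98., 66.]
x3_data = [75., 93., 90., 100., 70.]
y_data = [152., 185., 180., 196., 142.]
rows = list(zip(x1_data, x2_data, x3_data))


def hypothesis(w, b, x):
    # H(x1, x2, x3) = x1*w1 + x2*w2 + x3*w3, plus an assumed bias term b
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def cost(w, b):
    """Mean squared error over the five training rows."""
    return sum((hypothesis(w, b, x) - y) ** 2 for x, y in zip(rows, y_data)) / len(rows)


w, b = [0.0, 0.0, 0.0], 0.0
learning_rate = 1e-5  # hypothetical; kept small because the inputs are unscaled
n = len(rows)
costs = [cost(w, b)]
for _ in range(2000):
    errors = [hypothesis(w, b, x) - y for x, y in zip(rows, y_data)]
    grad_w = [2.0 / n * sum(e * x[j] for e, x in zip(errors, rows)) for j in range(3)]
    grad_b = 2.0 / n * sum(errors)
    w = [wi - learning_rate * gi for wi, gi in zip(w, grad_w)]
    b -= learning_rate * grad_b
    costs.append(cost(w, b))

print("first cost:", costs[0], "last cost:", costs[-1])
```

The tiny learning rate is a consequence of feeding raw scores near 100; scaling the inputs first would allow a larger step.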

Minimizing the linear regression cost in TensorFlow

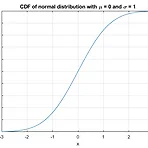

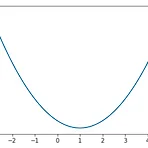

What does the cost function look like?

    import tensorflow as tf
    import matplotlib.pyplot as plt

    X = [1, 2, 3]
    Y = [1, 2, 3]

    W = tf.placeholder(tf.float32)

    # Our hypothesis for the linear model X * W
    hypothesis = X * W

    # cost/loss function
    cost = tf.reduce_mean(tf.square(hypothesis - Y))

    # Launch the graph in a session.
    sess = tf.Session()
    # Initializes global variables in th..
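To see the shape of this cost without a TensorFlow session, the same expression, the mean of (X * W - Y) squared, can be evaluated in plain Python over a sweep of W values. The sweep range and the names `w_values`, `cost_values`, and `best_w` are assumptions, since the excerpt ends before its own loop.

```python
X = [1, 2, 3]
Y = [1, 2, 3]


def cost(w):
    """Mean squared error of the hypothesis X * w against Y."""
    return sum((x * w - y) ** 2 for x, y in zip(X, Y)) / len(X)


# Sweep W over a hypothetical range, -3.0 to 5.0 in steps of 0.1.
w_values = [i * 0.1 for i in range(-30, 51)]
cost_values = [cost(w) for w in w_values]

best_w = min(w_values, key=cost)
print("W with the lowest cost:", round(best_w, 1))
```

The cost is a parabola in W: it is zero at W = 1, where the hypothesis reproduces Y exactly, and it rises symmetrically on either side (W = 0 and W = 2 both give 14/3).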

A simple linear regression in TensorFlow

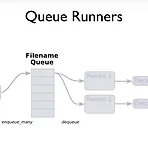

Implementing a simple linear regression in TensorFlow

(1) Build graph using TensorFlow operations

(2) Feed data and run graph (operation): sess.run(op, feed_dict={x: x_data})

(3) Update variables in the graph (and return values)

    from PIL import Image
    Image.open('mechanics.png')

(1) Build graph using TensorFlow operations

H(x) = Wx + b

    import tensorflow as tf

    # X and Y da..
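The three steps can be mimicked in plain Python to show what a gradient-descent optimizer does to W and b. This is a sketch rather than the post's TensorFlow code: the training values, the learning rate, and the step count are hypothetical, because the excerpt is cut off before its own data.

```python
# Hypothetical training data; the excerpt is cut off before its own values.
x_train = [1.0, 2.0, 3.0]
y_train = [1.0, 2.0, 3.0]


# (1) Define the model and the cost, the counterpart of building the graph.
def hypothesis(w, b, x):
    return w * x + b  # H(x) = Wx + b


def cost(w, b):
    """Mean squared error over the training pairs."""
    return sum((hypothesis(w, b, x) - y) ** 2 for x, y in zip(x_train, y_train)) / len(x_train)


# (2) Feed the data and evaluate, and (3) update the variables, repeatedly.
w, b = 0.0, 0.0
learning_rate = 0.05  # hypothetical
n = len(x_train)
for step in range(2001):
    grad_w = sum(2 * (hypothesis(w, b, x) - y) * x for x, y in zip(x_train, y_train)) / n
    grad_b = sum(2 * (hypothesis(w, b, x) - y) for x, y in zip(x_train, y_train)) / n
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
    if step % 500 == 0:
        print(step, cost(w, b), w, b)
```

On this data the fit should settle near W = 1 and b = 0, since y equals x for every pair.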

Basic TensorFlow operations

    from PIL import Image
    Image.open('1번.png')

TensorFlow Hello World

    import tensorflow as tf
    tf.__version__

Output: '1.13.1'

    # Create a constant op.
    # This op is added as a node to the default graph.
    hello = tf.constant('Hello, TensorFlow!')

    # Start a TF session.
    sess = tf.Session()

    # Run the op and get the result.
    print(sess.run(hello))

Output: b'Hello, TensorFlow!'

Which gra..

Classes

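What is a class?

Object orientation is one of the highlights of programming, and classes are central to implementing it. You can think of a class as a broad template that groups objects with similar attributes.

Class variables

    class Customer:
        welcome = '반갑습니다'

The class Customer has a variable named welcome whose value is '반갑습니다' ("Nice to meet you"). A class variable can be read through the class itself, as here, or through any instance of the class.

    Customer.welcome

Output: '반갑습니다'

Class functions

    class Customer:
        def info(self, id):
            print("id : %d" % id)

    new_Customer = Customer()
    new_Cust..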
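A short sketch of how a class variable and a method behave across instances. The `Customer` class mirrors the excerpt, but the greeting text, the `customer_id` parameter name, and the variables `first` and `second` are illustrative; `info` returns its string here instead of printing it so the result can be checked.

```python
class Customer:
    welcome = "Hello"  # class variable, shared by every instance (illustrative value)

    def info(self, customer_id):
        # Return the string instead of printing it so the result is easy to check.
        return "id : %d" % customer_id


first = Customer()
second = Customer()

print(Customer.welcome)   # read through the class
print(first.welcome)      # read through an instance

first.welcome = "Hi"      # creates an instance attribute on `first` only
print(first.welcome, second.welcome, Customer.welcome)

print(first.info(7))
```

Assigning through an instance does not change the class variable; it shadows it on that one object, so `second` and `Customer` still see the original value.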